Deep Learning for Language Modeling & Generative AI Applications

Generative AI is at the forefront of technological innovation, with applications across diverse industries such as healthcare, retail, sales, legal services, and entertainment. At the core of this revolution are the deep learning techniques that power large language models (LLMs) and enable sophisticated AI-driven applications.

This 10-day workshop, held over two weeks, provides a hands-on, computation-focused introduction to deep learning for language modeling and Generative AI. Participants will gain the essential skills to build and apply LLMs in real-world scenarios. The workshop balances foundational theory with practical implementation, introducing key concepts from linear algebra, deep learning, and natural language processing (NLP) through interactive coding sessions. Participants will explore the inner workings of LLMs, experiment with state-of-the-art frameworks such as PyTorch and Hugging Face, and build NLP applications such as sentiment analysis, sentence classification, and a chatbot. By the end of the workshop, attendees will have the knowledge and tools to apply Generative AI across a range of domains.

Course Content

| Item | Details |
|---|---|
| Unit name | Manipal School of Information Sciences |
| Month, Year | July 2025 |
| Duration | Two weeks |
| Attendance mode | Regular |
| Location | Manipal Academy of Higher Education (MAHE) |
| ECTS | 3 |

Learning Outcomes

On completion, students will be able to:

- LO1: Understand and implement the building blocks of deep learning models using PyTorch

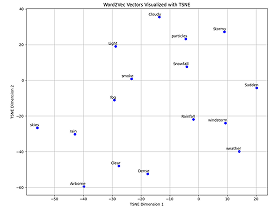

- LO2: Apply word embeddings to real-world problems

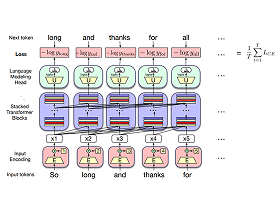

- LO3: Understand the self-attention mechanism and the transformer architecture

- LO4: Explore transformer-based LLMs using PyTorch and Hugging Face to solve real-world problems

Schedule

Note: meetings will be 3 hours per day and will be hands-on coding sessions using the Python programming language.

| Day | Topic | Learning outcome |
|---|---|---|
| Day 1 | Introduction to essential linear algebra for deep learning using PyTorch. Application project: analyzing words in Wikipedia articles using static embeddings. | LO1 |
| Day 2 | The softmax classifier for linear classification and its implementation using model subclassing in PyTorch. Application project: sentiment analysis of product reviews. | LO1 |
| Day 3 | Foundations of deep neural networks and their implementation using model subclassing in PyTorch. Application project: sentiment analysis of product reviews. | LO1 |
| Day 4 | Vector semantics, word embeddings, and preprocessing raw text data. Application project: preprocessing and analyzing the Reuters corpus. | LO2 |
| Day 5 | Learning word embeddings with the Word2Vec algorithm. Application project: embedding and visualizing words from the Reuters corpus. | LO2 |
| Saturday and Sunday | Weekend: KAIROS 2025 | |
| Day 6 | The self-attention mechanism in language modeling and the transformer neural network. Application project: analyzing static embeddings of words from Wikipedia articles and extending them to contextual embeddings. | LO3 |
| Day 7 | Transformer architectures for language modeling using PyTorch and Hugging Face. Application project: loading models, running inference, and training models with Hugging Face. | LO3 |
| Day 8 | Large language models with transformers. Application project: sentence classification with BERT on the BookCorpus dataset. | LO4 |
| Day 9 | Pre-training and fine-tuning large language models. Application project: building a movie recommendation chatbot. | LO4 |
| Day 10 | Final quiz and review. | |
| Saturday and Sunday | Closure and departure | |
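
Day 6 turns static word embeddings into contextual ones through self-attention. The following is a minimal sketch, assuming a single attention head with no masking, of scaled dot-product self-attention, softmax(Q Kᵀ / √d_k) V, written in plain Python so each step is visible: every token's output is a weighted average of the value vectors, weighted by how well its query matches each key. The three 2-dimensional "static embeddings" and the identity projection matrices are made-up values for illustration, not workshop data or code.

```python
import math


def matmul(a, b):
    """Multiply matrices stored as lists of rows: (n x m) @ (m x p) -> (n x p)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    return [list(col) for col in zip(*m)]


def softmax(row):
    """Softmax with the max subtracted for numerical stability."""
    top = max(row)
    exps = [math.exp(v - top) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over x (n_tokens x d_model)."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    # scores[i][j] = q_i . k_j / sqrt(d_k): how strongly token i attends to token j
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, transpose(k))]
    weights = [softmax(row) for row in scores]  # each row of weights sums to 1
    # Each output row is a weighted average of the value vectors (a contextual embedding).
    return matmul(weights, v), weights


# Made-up 2-d "static" embeddings for a 3-token sequence, with identity projections
# (so queries, keys, and values all equal the input embeddings).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
contextual, attn = self_attention(x, identity, identity, identity)
for row in attn:
    print([round(p, 3) for p in row])
```

In PyTorch the same computation collapses to `torch.softmax(q @ k.T / math.sqrt(d_k), dim=-1) @ v`, and PyTorch 2.x also provides `torch.nn.functional.scaled_dot_product_attention`. Transformer layers learn the projection matrices and run several such heads in parallel; this sketch leaves out multi-head attention, masking, and training.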

- Essential linear algebra for deep learning

- Fundamentals of linear classification: weights, bias, scores, and loss functions

- Calculus for the gradient descent algorithm

- Forward and backward propagation with regularization

- Batch processing for large datasets

- Linear to nonlinear classification via activation functions

- Computational setup of a shallow neural network

- Tuning neural network performance

- Data preprocessing and batch normalization

- Cross-validation for validating model performance

- Extending the computational setup from a shallow to a deep neural network

- Introduction to the TensorFlow library

- Application projects: implementing shallow and deep neural network models using TensorFlow; implementing machine learning models on edge devices using Edge Impulse.
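
The linear-classification topics above (weights, bias, scores, loss function, gradient descent, regularization, batch processing) fit together as in the following sketch: a two-class softmax classifier trained with full-batch gradient descent, written in plain Python so that every gradient is spelled out by hand. The toy data (hypothetical counts of positive and negative words per product review), the learning rate, the regularization strength, and the number of epochs are illustrative assumptions, not course materials.

```python
import math


def softmax(scores):
    """Turn a list of class scores into probabilities (max subtracted for stability)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def class_scores(w, b, x):
    """Linear scores s_c = w_c . x + b_c, one per class."""
    return [sum(wj * xj for wj, xj in zip(w_c, x)) + b_c for w_c, b_c in zip(w, b)]


def train_softmax_classifier(xs, ys, n_classes, lr=0.1, reg=1e-3, epochs=200):
    """Full-batch gradient descent on the L2-regularized softmax cross-entropy loss."""
    n, n_features = len(xs), len(xs[0])
    w = [[0.0] * n_features for _ in range(n_classes)]  # one weight row per class
    b = [0.0] * n_classes
    history = []
    for _ in range(epochs):
        grad_w = [[0.0] * n_features for _ in range(n_classes)]
        grad_b = [0.0] * n_classes
        data_loss = 0.0
        for x, y in zip(xs, ys):
            probs = softmax(class_scores(w, b, x))  # forward pass
            data_loss -= math.log(probs[y])
            for c in range(n_classes):
                # Backward pass: dL/ds_c = p_c - 1[c == y]
                d = probs[c] - (1.0 if c == y else 0.0)
                grad_b[c] += d
                for j in range(n_features):
                    grad_w[c][j] += d * x[j]
        # Mean cross-entropy plus the L2 penalty (reg / 2) * ||W||^2 (bias not penalized).
        penalty = 0.5 * reg * sum(v * v for row in w for v in row)
        history.append(data_loss / n + penalty)
        # Gradient-descent step using the batch-averaged gradient.
        for c in range(n_classes):
            b[c] -= lr * grad_b[c] / n
            for j in range(n_features):
                w[c][j] -= lr * (grad_w[c][j] / n + reg * w[c][j])
    return w, b, history


def predict(w, b, x):
    scores = class_scores(w, b, x)
    return max(range(len(scores)), key=scores.__getitem__)


# Hypothetical toy data: [positive-word count, negative-word count] per review,
# label 1 = positive review, 0 = negative review.
xs = [[3, 0], [4, 1], [3, 1], [0, 3], [1, 4], [1, 3]]
ys = [1, 1, 1, 0, 0, 0]
w, b, history = train_softmax_classifier(xs, ys, n_classes=2)
print(f"loss: {history[0]:.4f} -> {history[-1]:.4f}")
print([predict(w, b, x) for x in xs])
```

Because the gradient of the cross-entropy loss with respect to each score is simply p_c minus the one-hot label, the weight gradient is that quantity multiplied by the input. In PyTorch, a `torch.nn.Module` subclass with an `nn.Linear` layer, trained with `nn.CrossEntropyLoss` and autograd, computes the same gradients without writing them out by hand.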

Pre-requisites

- A strong will to explore and learn new computational ideas

- Prior experience with basic programming in Python, MATLAB, R, C, or C++, sufficient to follow and understand pre-filled code under instructor guidance

Assessment

| Type | Description | Weightage | Date | Mode |
|---|---|---|---|---|
| Class participation | Attendance and active participation in class meetings | 50% | Days 1-10 | In class |
| Final quiz | 30-minute multiple-choice quiz on Day 1-7 topics | 50% | Day 10 | In lab, online submission |

References

- A Friendly Introduction to Deep Learning and Neural Networks, Luis Serrano

- Neural Networks and Deep Learning, Michael Nielsen

- Practical Natural Language Processing, Sowmya Vajjala, Bodhisattwa Majumder, Anuj Gupta, and Harshit Surana, O'Reilly, June 2020

- Speech and Language Processing, 3rd edition, Dan Jurafsky and James H. Martin, Pearson

Name of the Coordinator

Distinctive Features

- Lab facilities with essential software and internet connectivity.

- Hands-on and fast-paced introduction to the principles of deep learning using Python.

- Solid foundation in the computational components of large language models essential for Generative AI applications.